Today I started categorising all my transactions on Up Bank with the help of AI agents.

I’m going to outline my approach for others interested in doing the same thing, to hopefully save you guys some time and energy. If you aren’t tech-inclined, don’t worry at all: here’s the link to my code to make it super simple. Just follow the instructions and ask me if you need any help!

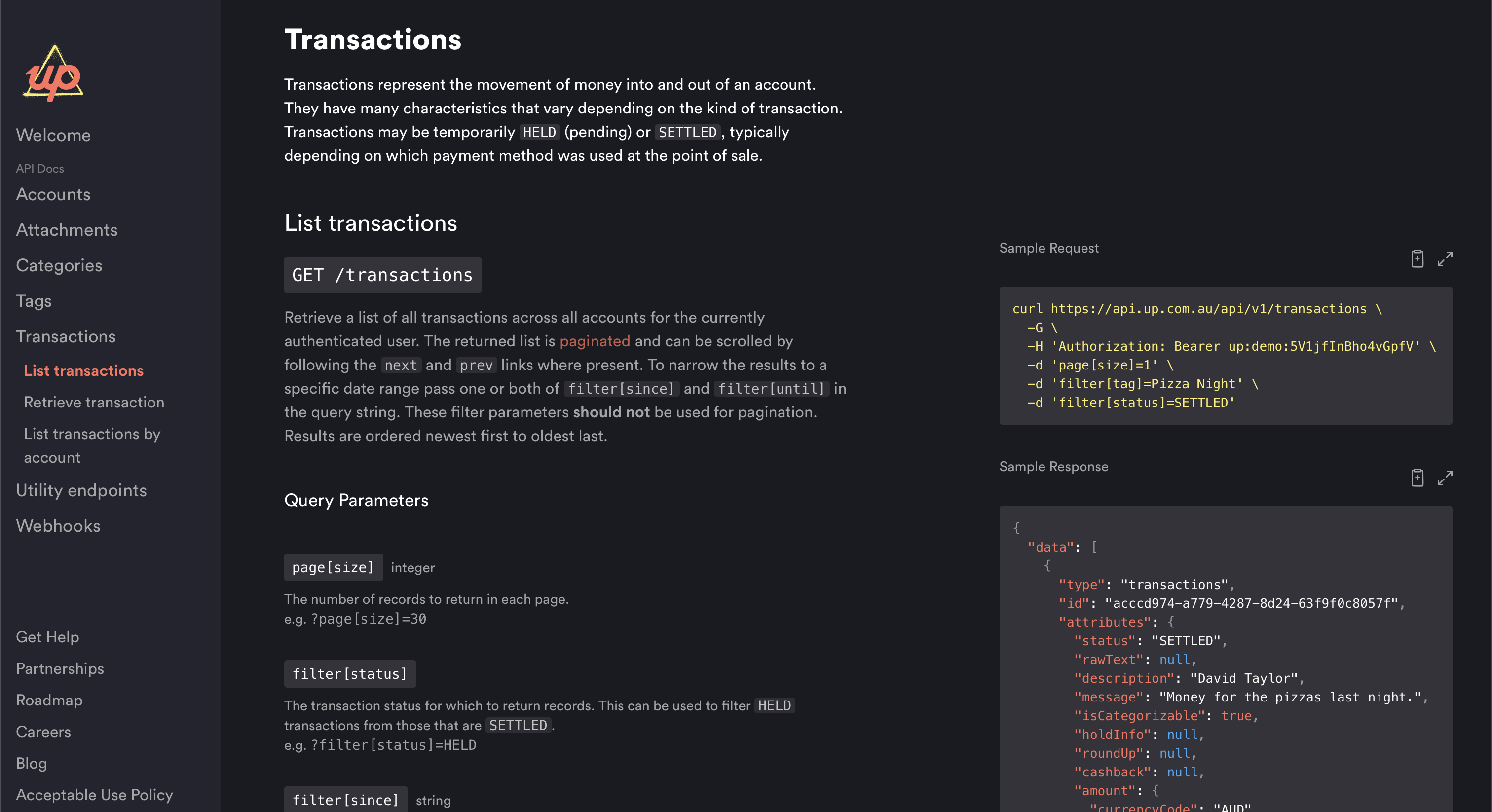

I began by downloading all my Up transaction data. Thankfully, Up exposes a public API, which makes it super convenient to download all of your data.
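A minimal sketch of that download using only Python's standard library. It is not necessarily the exact script used for this post. It assumes Up's v1 REST API at `https://api.up.com.au/api/v1`, a personal access token sent as a Bearer token, and cursor pagination where each page's `links.next` holds the URL of the following page (`null` on the last one). That is my reading of Up's developer docs, so double-check there. `fetch_json` and `iter_transactions` are hypothetical names.

```python
import json
import urllib.request
from typing import Callable, Iterator

# Base URL of Up's public API (v1), as assumed in the lead-in.
UP_API = "https://api.up.com.au/api/v1"


def fetch_json(url: str, token: str) -> dict:
    """GET one URL from the Up API, authenticating with a personal access token."""
    req = urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def iter_transactions(get: Callable[[str], dict], page_size: int = 100) -> Iterator[dict]:
    """Yield every transaction resource, following the `links.next` cursor page by page."""
    url = f"{UP_API}/transactions?page[size]={page_size}"
    while url:
        page = get(url)
        yield from page["data"]
        url = page["links"]["next"]  # None once we reach the last page
```

Calling `list(iter_transactions(lambda url: fetch_json(url, token)))` should pull every transaction. Keeping the fetcher injectable also means the paging logic can be tested without touching the network.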

Next, I planned out the categories and format I wanted my data in. With the help of Gemini Deep Research, I created the following schema:

```json
{
  "output_format": {
    "functional": {
      "level_1_flow": "Enum: [inflow, outflow, transfer]",
      "level_2_primary": "Enum: [income, transfer_in, transfer_out, loan_payments, bank_fees, entertainment, food_and_drink, general_merchandise, home_improvement, medical, personal_care, general_services, transportation, travel, rent_and_utilities]",
      "level_3_granular": "String: [Matches subcategory list e.g., dining_out, groceries, fuel, etc.]"
    },
    "context": {
      "intent": "Enum: [essential, discretionary, financial_future]",
      "recurrence": "Enum: [recurring_fixed, recurring_variable, one_off]",
      "tax_status": "Enum: [tax_deductible, not_deductible, tax_payment]",
      "life_event": "Enum: [moving, wedding, travel, medical_emergency, education, none]"
    },
    "behavioral": {
      "kakeibo_category": "Enum: [survival, optional, culture, extra]",
      "is_impulse_suspect": "Boolean",
      "social_context": "Enum: [solo, date, family, friends, work_colleagues]",
      "behavioral_tags": "Array of Strings"
    },
    "enrichment": {
      "ultimate_creditor": "String: [Extracted merchant name]",
      "platform": "Enum: [online, in_store, service]",
      "confidence": "Number: [0.0 - 1.0]"
    },
    "reasoning": "String: [Brief explanation of classification logic]"
  }
}
```
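If a model or provider doesn't enforce this schema for you, a quick post-hoc check catches labels that drift outside the allowed values. Below is a minimal sketch that copies the enums from the schema above into a lookup table. `ALLOWED` and `schema_errors` are hypothetical names, and the check follows this design schema strictly, so `null` is rejected for `social_context` and `platform`.

```python
# Allowed enum values, copied from the design schema above: (group, field) -> values.
ALLOWED = {
    ("functional", "level_1_flow"): {"inflow", "outflow", "transfer"},
    ("functional", "level_2_primary"): {
        "income", "transfer_in", "transfer_out", "loan_payments", "bank_fees",
        "entertainment", "food_and_drink", "general_merchandise", "home_improvement",
        "medical", "personal_care", "general_services", "transportation", "travel",
        "rent_and_utilities",
    },
    ("context", "intent"): {"essential", "discretionary", "financial_future"},
    ("context", "recurrence"): {"recurring_fixed", "recurring_variable", "one_off"},
    ("context", "tax_status"): {"tax_deductible", "not_deductible", "tax_payment"},
    ("context", "life_event"): {"moving", "wedding", "travel", "medical_emergency", "education", "none"},
    ("behavioral", "kakeibo_category"): {"survival", "optional", "culture", "extra"},
    ("behavioral", "social_context"): {"solo", "date", "family", "friends", "work_colleagues"},
    ("enrichment", "platform"): {"online", "in_store", "service"},
}


def schema_errors(result: dict) -> list[str]:
    """Return a list of problems; an empty list means every checked field is valid."""
    errors = []
    for (group, field), allowed in ALLOWED.items():
        value = result.get(group, {}).get(field)
        if value not in allowed:
            errors.append(f"{group}.{field}={value!r}")
    # confidence must be a real number in [0, 1]; bool is excluded because it subclasses int.
    confidence = result.get("enrichment", {}).get("confidence")
    if isinstance(confidence, bool) or not isinstance(confidence, (int, float)) or not 0.0 <= confidence <= 1.0:
        errors.append(f"enrichment.confidence={confidence!r}")
    if not isinstance(result.get("behavioral", {}).get("is_impulse_suspect"), bool):
        errors.append("behavioral.is_impulse_suspect is not a boolean")
    return errors
```

Run against a well-formed classification, this returns an empty list. Any bad field comes back as a readable `group.field=value` string, which is easy to log or use to trigger a retry.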

For example, the following Up transaction:

```json
{
  "id": "f4b139b3-2399-4ef2-ae42-15e266e298dd",
  "description": "Miss Ping’s",
  "amount": -83.59,
  "currency": "AUD",
  "created_at": "2025-12-11 12:34:32+11:00",
  "raw_text": "Miss Ping's The Glen Glen",
  "message": "",
  "up_category": "restaurants-and-cafes"
}
```

results in the output:

```json
{
  "functional": {
    "level_1_flow": "outflow",
    "level_2_primary": "food_and_drink",
    "level_3_granular": "dining_out"
  },
  "context": {
    "intent": "discretionary",
    "recurrence": "one_off",
    "tax_status": "not_deductible",
    "life_event": "none"
  },
  "behavioral": {
    "kakeibo_category": "optional",
    "is_impulse_suspect": false,
    "social_context": "friends",
    "behavioral_tags": [
      "restaurant",
      "thai_cuisine",
      "group_dining"
    ]
  },
  "enrichment": {
    "ultimate_creditor": "Miss Ping's Thai Eatery",
    "platform": "in_store",
    "confidence": 0.98
  },
  "reasoning": "The transaction at 'Miss Ping's' in 'The Glen' is identified as a Thai restaurant. The amount ($83.50) is typical for group meal or a significant dinner out, placing it in discretionary 'food_and_drink'."
}
```
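The input record in this example is a flattened version of what Up's API returns. Below is a minimal sketch of that flattening step. It assumes the field names of Up's transaction resource (`attributes.amount.value` as a decimal string, `attributes.createdAt` in ISO 8601, `attributes.rawText`, `relationships.category.data`), based on my reading of their docs. `to_record` is a hypothetical helper, not part of any library.

```python
from datetime import datetime


def to_record(txn: dict) -> dict:
    """Flatten one Up API transaction resource into the compact record fed to the model."""
    attrs = txn["attributes"]
    category = txn["relationships"]["category"]["data"]  # None when Up hasn't categorised it
    return {
        "id": txn["id"],
        "description": attrs["description"],
        # Up sends the amount as a decimal string, e.g. "-83.59"; a float is fine for a prompt.
        "amount": float(attrs["amount"]["value"]),
        "currency": attrs["amount"]["currencyCode"],
        # Re-render the ISO 8601 timestamp with a space instead of the "T" separator.
        "created_at": datetime.fromisoformat(attrs["createdAt"]).isoformat(sep=" "),
        "raw_text": attrs["rawText"],
        "message": attrs["message"] or "",  # Up uses null for "no message"
        "up_category": category["id"] if category else None,
    }
```

Trimming the payload down like this keeps prompts short, which matters when you're sending thousands of them. Just don't use the float amount for anything that needs exact cents.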

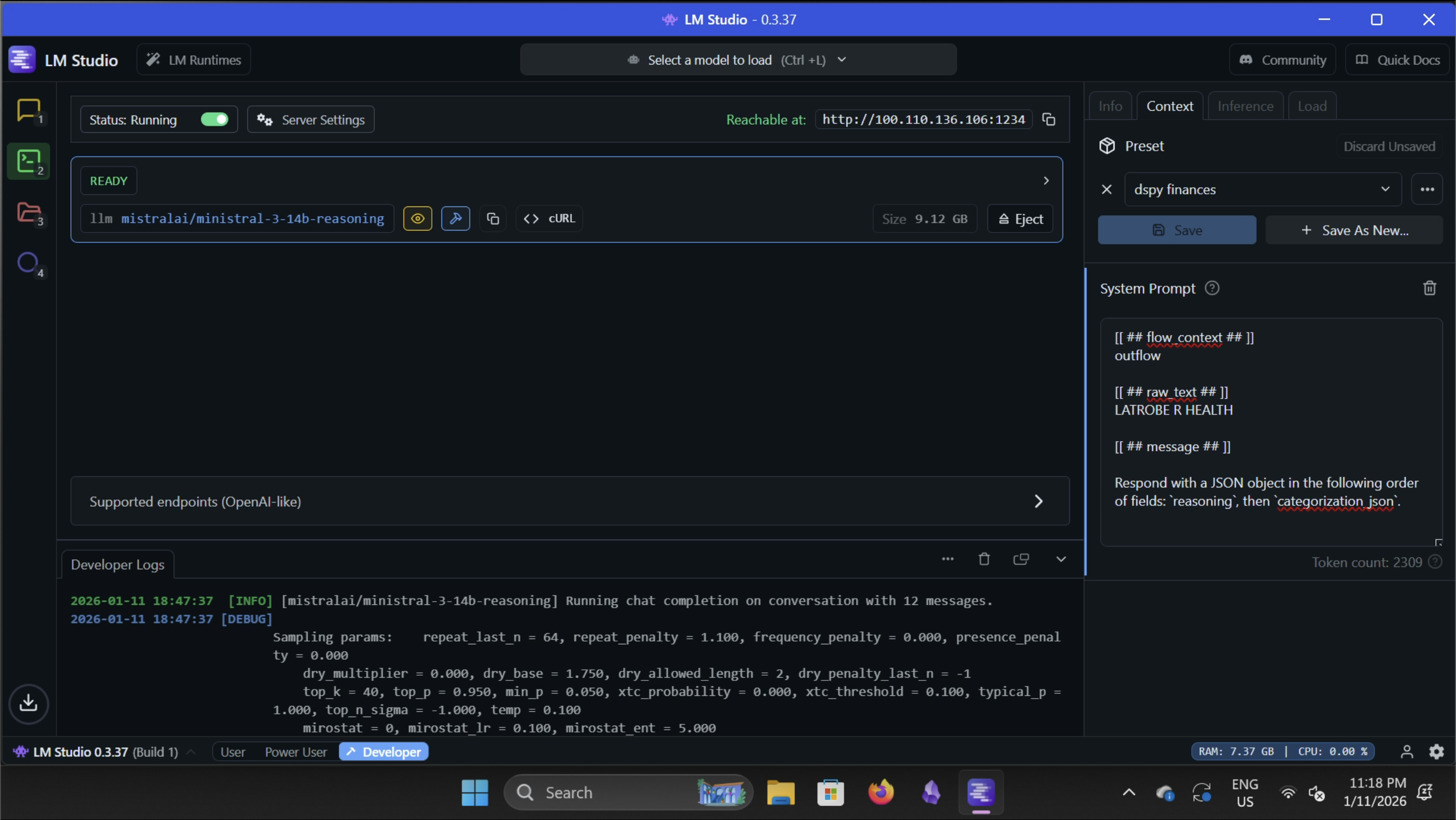

I started by using LM Studio on my PC to categorise all my transactions locally.

I used Ministral-3, the 14B-parameter version. I have an RTX 4070 Super, and I think this is currently the best local model for a decent trade-off between speed and intelligence.

I also used DSPy (a framework that can automatically optimise prompts) to try to create an optimised system prompt, and enforced structured outputs using the following JSON Schema:

```json
{
  "type": "object",
  "properties": {
    "functional": {
      "type": "object",
      "properties": {
        "level_1_flow": {
          "type": "string",
          "enum": ["inflow", "outflow", "transfer"]
        },
        "level_2_primary": {
          "type": "string",
          "enum": [
            "income", "transfer_in", "transfer_out", "loan_payments", "bank_fees",
            "entertainment", "food_and_drink", "general_merchandise", "home_improvement",
            "medical", "personal_care", "general_services", "transportation", "travel",
            "rent_and_utilities"
          ]
        },
        "level_3_granular": {
          "type": ["string", "null"]
        }
      },
      "required": ["level_1_flow", "level_2_primary", "level_3_granular"]
    },
    "context": {
      "type": "object",
      "properties": {
        "intent": {
          "type": "string",
          "enum": ["essential", "discretionary", "financial_future"]
        },
        "recurrence": {
          "type": "string",
          "enum": ["recurring_fixed", "recurring_variable", "one_off"]
        },
        "tax_status": {
          "type": "string",
          "enum": ["tax_deductible", "not_deductible", "tax_payment"]
        },
        "life_event": {
          "type": "string",
          "enum": ["moving", "wedding", "travel", "medical_emergency", "education", "none"]
        }
      },
      "required": ["intent", "recurrence", "tax_status", "life_event"]
    },
    "behavioral": {
      "type": "object",
      "properties": {
        "kakeibo_category": {
          "type": "string",
          "enum": ["survival", "optional", "culture", "extra"]
        },
        "is_impulse_suspect": {
          "type": "boolean"
        },
        "social_context": {
          "type": ["string", "null"],
          "enum": ["solo", "date", "family", "friends", "work_colleagues", null]
        },
        "behavioral_tags": {
          "type": "array",
          "items": { "type": "string" }
        }
      },
      "required": ["kakeibo_category", "is_impulse_suspect", "social_context", "behavioral_tags"]
    },
    "enrichment": {
      "type": "object",
      "properties": {
        "ultimate_creditor": {
          "type": "string"
        },
        "platform": {
          "type": ["string", "null"],
          "enum": ["online", "in_store", "service", null]
        },
        "confidence": {
          "type": "number",
          "minimum": 0,
          "maximum": 1
        }
      },
      "required": ["ultimate_creditor", "platform", "confidence"]
    },
    "reasoning": {
      "type": "string"
    }
  },
  "required": ["functional", "context", "behavioral", "enrichment", "reasoning"]
}
```
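Here is a minimal sketch of sending one transaction to a local model with this schema enforced. It assumes LM Studio's OpenAI-compatible server on its default address (`http://localhost:1234/v1/chat/completions`) and the OpenAI-style `response_format` of type `json_schema`. Setting temperature 0 is my own choice to get repeatable labels. The model identifier and system prompt are placeholders: use whatever name LM Studio lists for your downloaded model, and whatever prompt you end up with (e.g. the DSPy-optimised one). `build_request` and `classify` are hypothetical names.

```python
import json
import urllib.request

# Assumed default address of LM Studio's OpenAI-compatible local server.
LMSTUDIO_URL = "http://localhost:1234/v1/chat/completions"


def build_request(txn: dict, schema: dict, system_prompt: str, model: str) -> dict:
    """Build a chat-completions body asking the model for JSON that matches `schema`."""
    return {
        "model": model,
        "temperature": 0,  # deterministic-ish output for classification
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": json.dumps(txn, ensure_ascii=False)},
        ],
        "response_format": {
            "type": "json_schema",
            "json_schema": {"name": "transaction_classification", "strict": True, "schema": schema},
        },
    }


def classify(txn: dict, schema: dict, system_prompt: str, model: str) -> dict:
    """POST one transaction to the local server and parse the structured JSON reply."""
    body = json.dumps(build_request(txn, schema, system_prompt, model)).encode()
    req = urllib.request.Request(LMSTUDIO_URL, data=body, headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        reply = json.load(resp)
    return json.loads(reply["choices"][0]["message"]["content"])
```

Google also offers an OpenAI-compatible endpoint for Gemini, so a similar request body may work there with a different URL and an API key. Check Google's docs for which JSON Schema features it accepts before relying on that.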

Initially, the model would sometimes get the inflow vs outflow property wrong. For example, when someone transferred money to me with the message "parking", it decided this was a fine and treated it as money leaving my account. To fix this, I made the flow property deterministic: it's set by checking whether the dollar amount is negative, so any negative amount (e.g. -$anything) is always classified as outflow.
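The rule above is simple enough to sketch directly. This version overwrites whatever the model returned for `level_1_flow` using only the sign of the amount. It is a hypothetical helper, and it implements just the sign check described here: it never emits `transfer`, and it treats a zero amount as inflow. How transfers between your own accounts should be labelled is a separate decision.

```python
def apply_deterministic_flow(result: dict, amount: float) -> dict:
    """Return a copy of the model's result with level_1_flow set from the amount's sign."""
    fixed = {**result, "functional": {**result["functional"]}}  # copy, don't mutate the input
    fixed["functional"]["level_1_flow"] = "outflow" if amount < 0 else "inflow"
    return fixed
```

An alternative design is to drop `level_1_flow` from the schema sent to the model entirely and only fill it in afterwards, so the model can't get it wrong in the first place.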

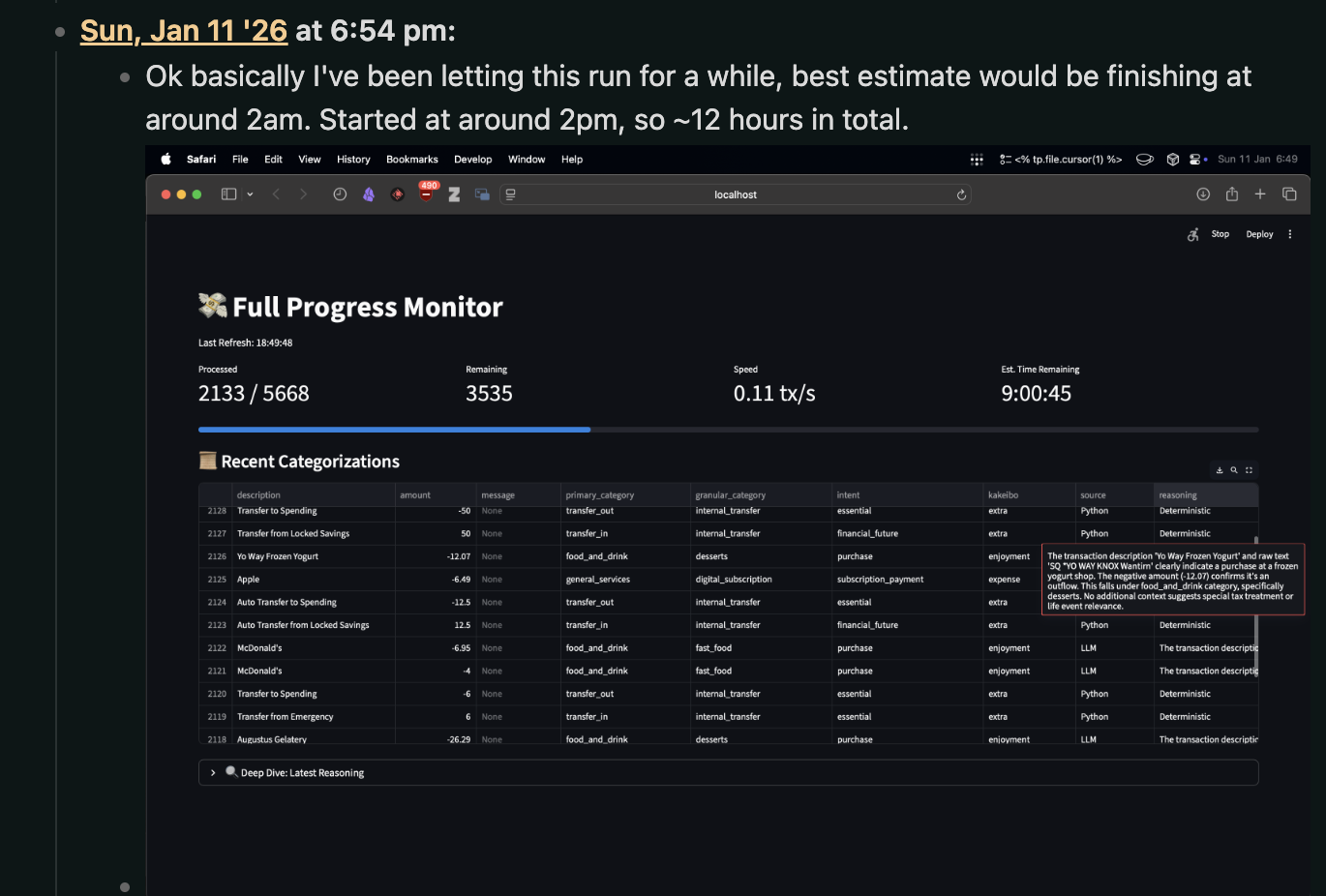

Categorising ~4000 transactions locally would’ve taken around 12 hours.

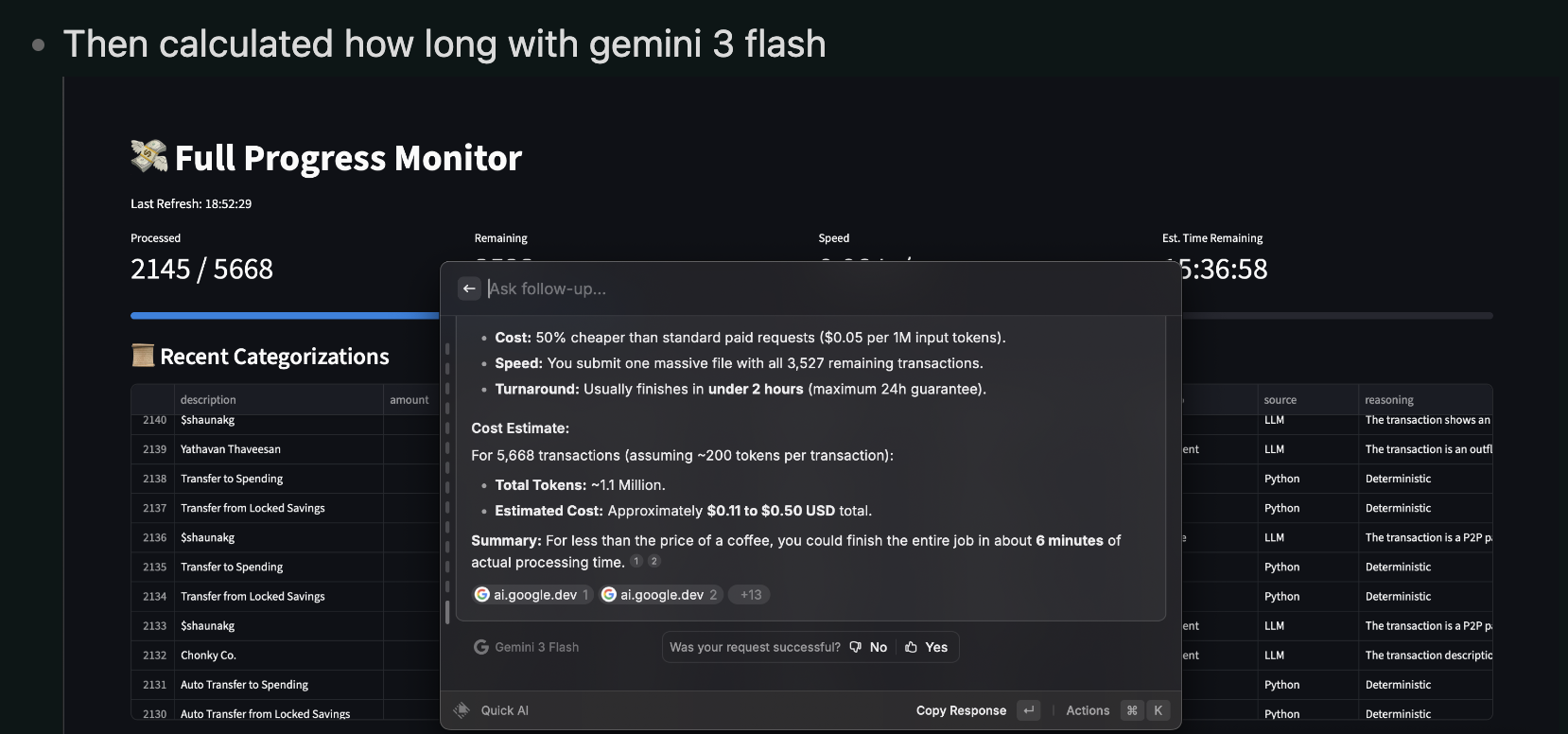

It was only after a while that I thought to check how fast and cheap it would be if I used Gemini-3 Flash.

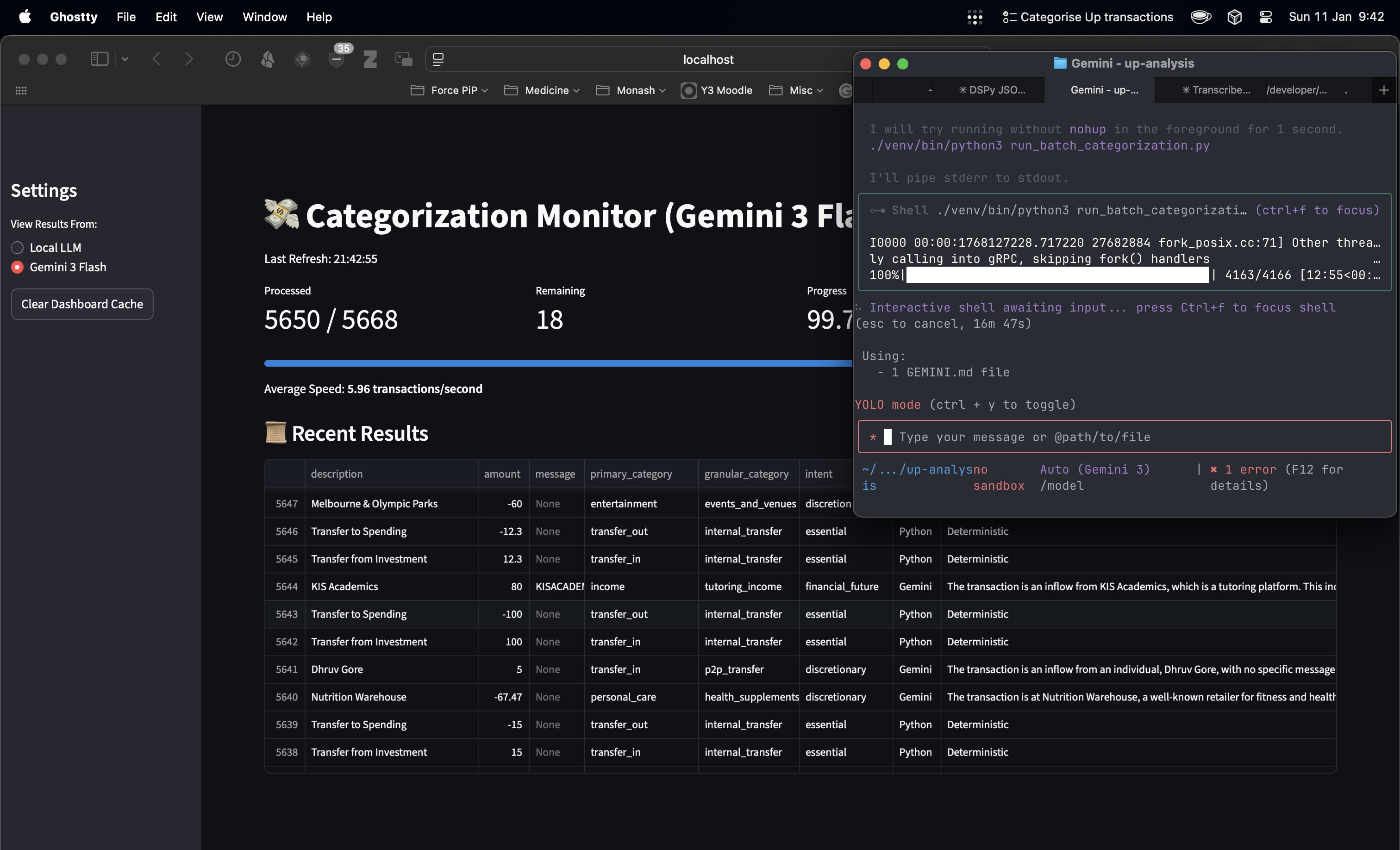

In the end, I switched completely to Gemini-3 Flash. As far as I know, Google doesn't collect or train on data sent through its paid API, so I was comfortable with the privacy risk. Categorising all my transactions took ~13 minutes in total and cost me ~US$4.50.
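Putting the two runs side by side per transaction, using the approximate figures above for ~4000 transactions. The local figure is an estimate rather than a completed run:

$$
\begin{aligned}
\text{Local (Ministral-3 14B): } & \frac{12 \times 3600\ \text{s}}{4000} \approx 10.8\ \text{s per transaction} \\
\text{Gemini-3 Flash: } & \frac{13 \times 60\ \text{s}}{4000} \approx 0.2\ \text{s per transaction}, \qquad \frac{\text{USD } 4.50}{4000} \approx \text{USD } 0.0011\ \text{per transaction} \\
\text{Speed-up: } & \frac{12 \times 60\ \text{min}}{13\ \text{min}} \approx 55\times
\end{aligned}
$$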

Overall, I'm happy with the outcome. I’ll probably do a separate post on the insights from my data and the things I’d do differently. Up is really good, and I’d highly recommend it!!

Thanks for reading, hope you guys enjoyed it! I’ll update this post tomorrow with a link so you can do this for yourselves almost automatically. Don’t forget to like, comment and subscribe :)))